Kubernetes config file examples

Pod + ConfigMap + Service

Run a website with a green background

Save the following pod, config map and service as `pod.yaml`, create them, and check that they are running:

```shell
kubectl create -f pod.yaml
kubectl get pods,svc
```

```yaml
apiVersion: v1 # pin the Kubernetes API version
kind: Pod # define a pod
metadata:
  name: green
  labels:
    app: green # label the pod with key 'app' and value 'green'
spec:
  containers:
    - name: nginx # add one container named 'nginx'
      image: nginx:1.21.6
      volumeMounts:
        - name: html
          mountPath: /usr/share/nginx/html # place the volume's contents at this path in the running container
  volumes:
    - name: html
      configMap:
        name: green
        items: # map config map keys to file names: key `body` is written to `index.html` instead of a file named `body`
          - key: body
            path: index.html
---
apiVersion: v1
kind: ConfigMap # define key-value pairs that the pod mounts as files
metadata:
  name: green
data:
  body: <body style="background-color:green;"></body>
---
apiVersion: v1
kind: Service # define a service that exposes the pod labeled app=green on port 80
metadata:
  name: green
spec:
  selector:
    app: green
  ports:
    - port: 80
```

Jobs

Job

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: my-job
spec:
  completions: 10 # run until 10 pods have completed successfully
  parallelism: 5 # run up to 5 pods in parallel
  template:
    spec:
      containers:
        - name: alpine
          image: alpine:3.16.0
          command: ["sleep"]
          args: ["10"] # each pod takes about 10 seconds
      restartPolicy: Never
```

Cron Job

Run an action repeatedly on a schedule. Each run of the cron job creates a new Job, which starts a new pod; older Jobs and their pods are deleted depending on the `successfulJobsHistoryLimit` value.

apiVersion: batch/v1

kind: CronJob

metadata:

name: my-cronjob

spec:

schedule: "*/1 * * * *" # Runs every minute

jobTemplate:

spec:

template:

spec:

containers:

- name: alpine

image: alpine:3.16.0

command: ["/bin/sh"]

args: ["-c", "date"]

restartPolicy: OnFailure

successfulJobsHistoryLimit: 2 # after completion, keep two jobs so that their logs may be observed- Run

watch -n 1 kubectl get cronjobs,podsto watch changes - Run

kubectl create -f cronjob.yamlto start the cronjob.

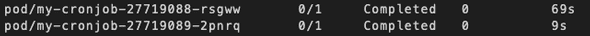

You’ll see something like this:

- Cleanup via

kubectl delete cronjob my-cronjob(orkubectl delete cronjob --all)
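The `schedule` field uses the standard five-field cron format: minute, hour, day of month, month, day of week. The fragment below is an illustrative sketch, not part of the original example: the commented-out schedules are hypothetical alternatives, and `failedJobsHistoryLimit` is the sibling field of `successfulJobsHistoryLimit` for failed Jobs. When omitted, the two limits default to 3 and 1.

```yaml
# Illustrative fragment of a CronJob spec; only the fields discussed here are shown.
spec:
  # Field order: minute hour day-of-month month day-of-week
  schedule: "*/1 * * * *"        # every minute, same as "* * * * *"
  # schedule: "*/5 * * * *"      # hypothetical: every 5 minutes
  # schedule: "0 3 * * *"        # hypothetical: every day at 03:00
  # schedule: "0 9 * * 1"        # hypothetical: every Monday at 09:00
  successfulJobsHistoryLimit: 2  # keep the 2 most recent successful Jobs (default: 3)
  failedJobsHistoryLimit: 1      # keep the most recent failed Job (default: 1)
```

Lowering the limits keeps fewer finished Jobs (and their pods and logs) around in `kubectl get cronjobs,pods`.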

Simple deployment

Run deployment and scale

- Run Kubernetes via `minikube` and start a watcher via `watch -n 1 kubectl get pods` in a terminal window.
- Create the deployment: `kubectl create deployment host-logger --image=trimhall/my-example:latest`. Image `trimhall/my-example` is an adaptation of the hello-world image.
- Expose the running app (`3000` is the internal port number): `kubectl expose deployment host-logger --type=NodePort --port=3000`
- Run the service in your browser: `minikube service host-logger`. This opens the application in your browser at a URL similar to http://192.168.49.2:31601/ with content such as `Hello World! this application is running on host-logger-6667d64984-ln8wl`.
- Scale your app up: `kubectl scale deployments/host-logger --replicas=10`
- And scale it down: `kubectl scale deployments/host-logger --replicas=5`

Auto healing

Let's destroy a running container and watch Kubernetes self-heal by spinning up a new container to restore the declared desired state.
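The declared desired state lives in the `host-logger` Deployment from the previous section. Below is a minimal sketch of roughly what that object contains after scaling to 5 replicas; it is an approximation, not the exact object kubectl generates. In particular, the container name `my-example` is an assumption about how `kubectl create deployment` names containers; `kubectl get deployment host-logger -o yaml` shows the real object.

```yaml
# Sketch (assumption): approximately the Deployment created by
# `kubectl create deployment host-logger --image=trimhall/my-example:latest`
# after `kubectl scale deployments/host-logger --replicas=5`.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: host-logger
  labels:
    app: host-logger
spec:
  replicas: 5                # desired pod count; the ReplicaSet replaces deleted pods
  selector:
    matchLabels:
      app: host-logger       # pods managed by this Deployment
  template:
    metadata:
      labels:
        app: host-logger
    spec:
      restartPolicy: Always  # only value allowed for Deployments; the kubelet restarts removed containers
      containers:
        - name: my-example   # assumption: container name derived from the image name
          image: trimhall/my-example:latest
```

Two mechanisms enforce this state: the ReplicaSet behind the Deployment recreates a pod if one is deleted, while a container removed with `docker rm -f`, as in the steps that follow, is restarted by the kubelet inside the same pod, so the watcher will likely show that pod's RESTARTS count going up.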

- Exec into the running `minikube` Docker container (which hosts the Kubernetes cluster; its image might be similar to `gcr.io/k8s-minikube/kicbase:v0.0.34`):
  - Run `docker ps`.
  - Use the first 3-4 alphanumeric characters (letters or digits) of the container ID and run `docker exec -it <FIRST_LETTERS_OF_CONTAINER_ID> /bin/sh`.
- The minikube container itself runs all the spun-up Kubernetes entities as Docker containers (inception 😉):
  - Run `docker ps | grep my-example` to see the spun-up containers.
  - Use the first few characters of a container ID to kill one of the containers, e.g. `docker rm -f 8db`.
- Observe the watcher: you'll see the auto-healing happen live.
